2. McCulloch WS, Pitts W. A logical calculus of the ideas immanent in nervous activity. 1943. Bull Math Biol 1990;52:99-115.

3. Rosenblatt F. The perceptron: a probabilistic model for information storage and organization in the brain. Psychol Rev 1958;65:386-408.

4. Hinton GE, Salakhutdinov RR. Reducing the dimensionality of data with neural networks. Science 2006;313:504-7.

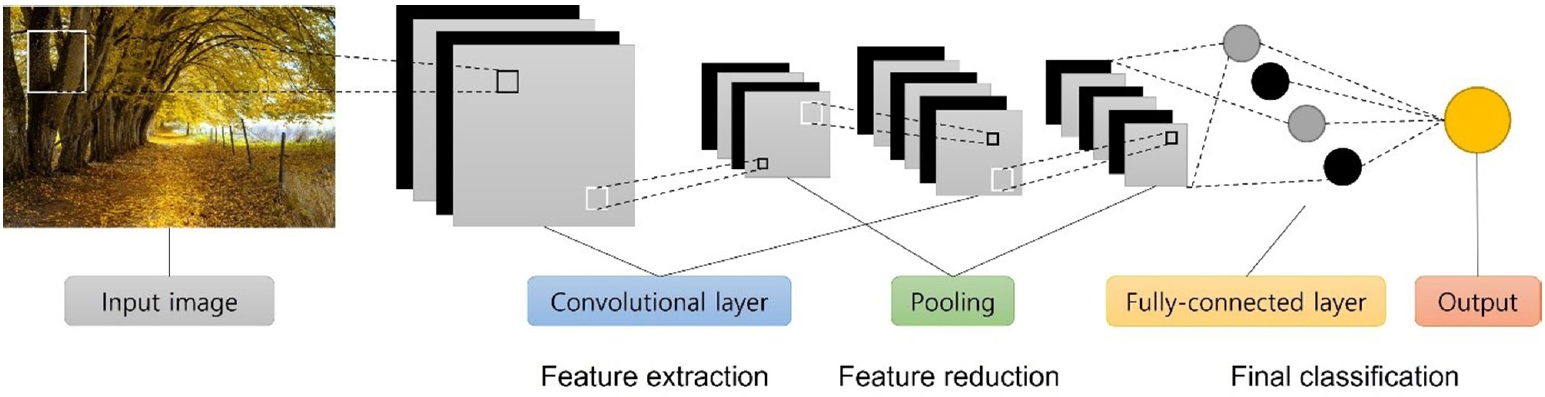

5. Anwar SM, Majid M, Qayyum A, Awais M, Alnowami M, Khan MK. Medical image analysis using convolutional neural networks: a review. J Med Syst 2018;42:226.

6. LeCun Y, Bottou L, Bengio Y, Haffner P. Gradient-based learning applied to document recognition. Proceedings of the IEEE 1998;86:2278-324.

10. Al-Helo S, Alomari RS, Ghosh S, Chaudhary V, Dhillon G, Al-Zoubi MB, et al. Compression fracture diagnosis in lumbar: a clinical CAD system. Int J Comput Assist Radiol Surg 2013;8:461-9.

11. Basha CMAKZ, Padmaja M, Balaji GN. Computer aided fracture detection system. J Med Imaging Health Inform 2018;8:526-31.

13. Gale W, Oakden-Rayner L, Carneiro G, Bradley AP, Palmer LJ. Detecting hip fractures with radiologist-level performance using deep neural networks. arXiv 1711.06504 [Preprint]. 2017 [cited 2021 Sep 28]. https://arxiv.org/abs/1711.06504.

14. Kim DH, MacKinnon T. Artificial intelligence in fracture detection: transfer learning from deep convolutional neural networks. Clin Radiol 2018;73:439-45.

17. Urakawa T, Tanaka Y, Goto S, Matsuzawa H, Watanabe K, Endo N. Detecting intertrochanteric hip fractures with orthopedist-level accuracy using a deep convolutional neural network. Skeletal Radiol 2019;48:239-44.

19. Bayram F, Cakıroglu M. DIFFRACT: DIaphyseal Femur FRActure Classifier SysTem. Biocybern Biomed Eng 2016;36:157-71.

20. Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 2016;316:2402-10.

22. Nam JG, Park S, Hwang EJ, Lee JH, Jin KN, Lim KY, et al. Development and validation of deep learning-based automatic detection algorithm for malignant pulmonary nodules on chest radiographs. Radiology 2019;290:218-28.

24. Luo H, Xu G, Li C, He L, Luo L, Wang Z, et al. Real-time artificial intelligence for detection of upper gastrointestinal cancer by endoscopy: a multicentre, case-control, diagnostic study. Lancet Oncol 2019;20:1645-54.

25. Nishimoto S, Sotsuka Y, Kawai K, Ishise H, Kakibuchi M. Personal computer-based cephalometric landmark detection with deep learning, using cephalograms on the internet. J Craniofac Surg 2019;30:91-5.

26. Dhar R, Falcone GJ, Chen Y, Hamzehloo A, Kirsch EP, Noche RB, et al. Deep learning for automated measurement of hemorrhage and perihematomal edema in supratentorial intracerebral hemorrhage. Stroke 2020;51:648-51.

27. Ironside N, Chen CJ, Mutasa S, Sim JL, Ding D, Marfatiah S, et al. Fully automated segmentation algorithm for perihematomal edema volumetry after spontaneous intracerebral hemorrhage. Stroke 2020;51:815-23.

28. Sharrock MF, Mould WA, Ali H, Hildreth M, Awad IA, Hanley DF, et al. 3D deep neural network segmentation of intracerebral hemorrhage: development and validation for clinical trials. Neuroinformatics 2021;19:403-15.

29. Zhao X, Chen K, Wu G, Zhang G, Zhou X, Lv C, et al. Deep learning shows good reliability for automatic segmentation and volume measurement of brain hemorrhage, intraventricular extension, and peripheral edema. Eur Radiol 2021;31:5012-20.

32. Benba A, Jilbab A, Hammouch A. Discriminating between patients with Parkinson’s and neurological diseases using cepstral analysis. IEEE Trans Neural Syst Rehabil Eng 2016;24:1100-8.

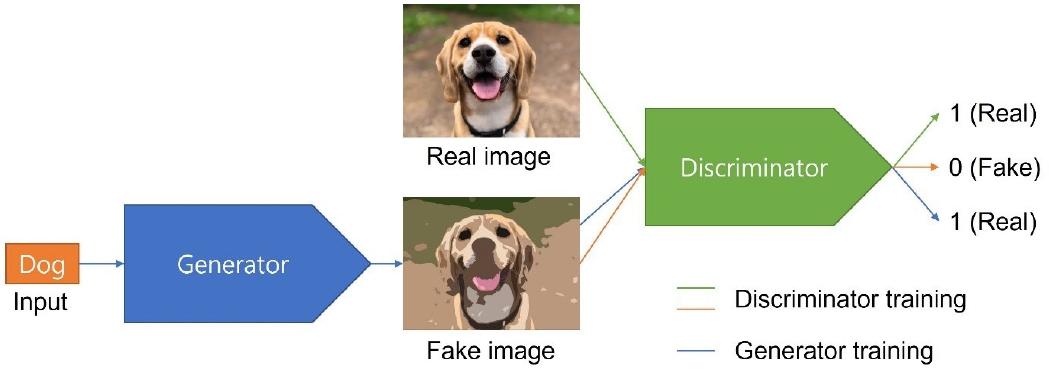

35. Goodfellow IJ, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, et al. Generative adversarial nets. In: Ghahramani Z, Welling M, Cortes C, Lawrence N, Weinberger KQ, editors. Advances in neural information processing systems 27. Red Hook: Curran; 2014. p. 2672-80.

36. Zhu JY, Park T, Isola P, Efros AA. Unpaired image-to-image translation using cycle-consistent adversarial networks. Paper presented at: 2017 IEEE International Conference on Computer Vision; 2017 Oct 22-29; Venice, Italy.

37. Mirza M, Osindero S. Conditional generative adversarial nets. arXiv 1411.1784 [Preprint]. 2014 [cited 2021 Sep 28]. https://arxiv.org/abs/1411.1784.

38. Karras T, Aila T, Laine S, Lehtinen J. Progressive growing of GANs for improved quality, stability, and variation. arXiv 1710.10196 [Preprint]. 2018 [cited 2021 Sep 28]. https://arxiv.org/abs/1710.10196.

39. Wolterink JM, Dinkla AM, Savenije MHF, Seevinck PR, van den Berg CAT, Isgum I. Deep MR to CT synthesis using unpaired data. In: Gooya A, Frangi AF, Tsaftaris SA, Prince JL, editors. Simulation and Synthesis in Medical Imaging. Cham: Springer; 2017. p. 14-23.

42. Chang HH, Moura JMF. Biomedical signal processing. In: Kutz M, editor. Biomedical engineering and design handbook. Vol. 1. 2nd ed. New York: McGraw-Hill; 2010. p. 559-79.

44. Kwon JM, Jeon KH, Kim HM, Kim MJ, Lim S, Kim KH, et al. Deep-learning-based out-of-hospital cardiac arrest prognostic system to predict clinical outcomes. Resuscitation 2019;139:84-91.

47. Salgarello M, Pagliara D, Rossi M, Visconti G, Barone-Adesi L. Postoperative monitoring of free DIEP flap in breast reconstruction with near-infrared spectroscopy: variables affecting the regional oxygen saturation. J Reconstr Microsurg 2018;34:383-8.

48. Akita S, Mitsukawa N, Tokumoto H, Kubota Y, Kuriyama M, Sasahara Y, et al. Regional oxygen saturation index: a novel criterion for free flap assessment using tissue oximetry. Plast Reconstr Surg 2016;138:510e-518e.